Explainable and Generalizable AI with Attention + Reasoning + Language


We are interested in AI systems that perceive, learn, and reason to perform real-world tasks. We aim to develop systems that are explainable, generalizable, and trustworthy. Below are a few current topics:


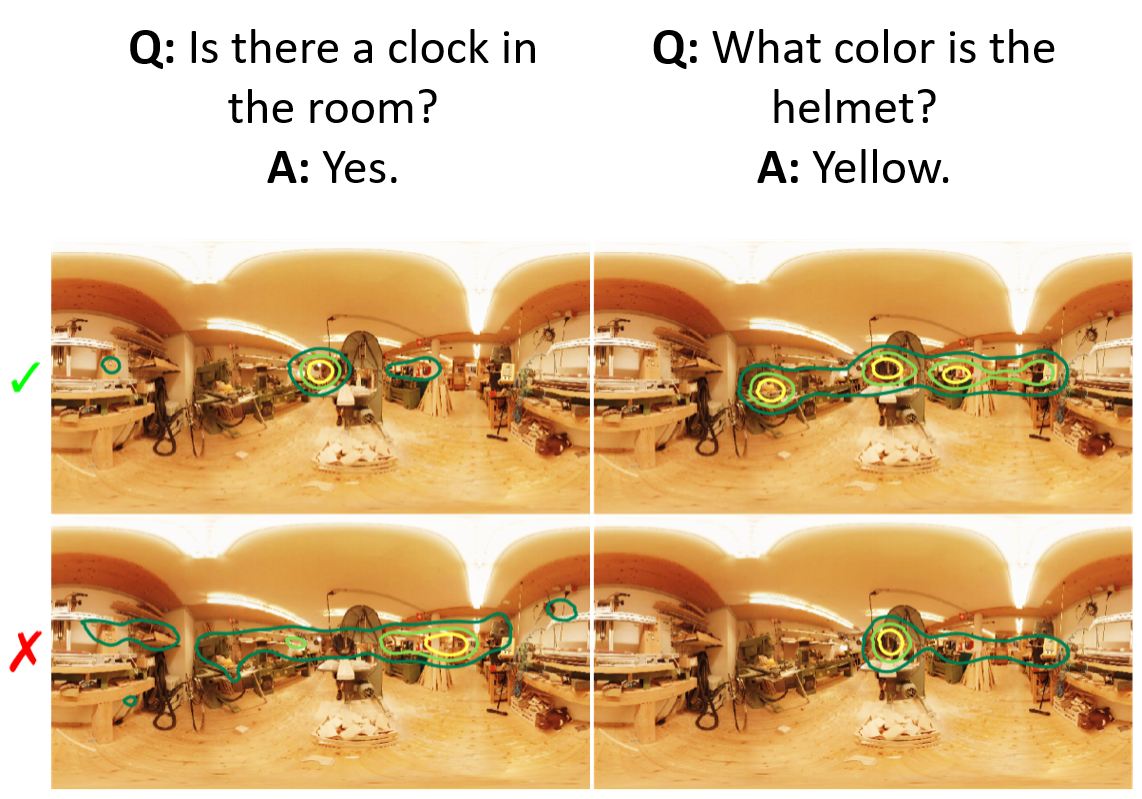

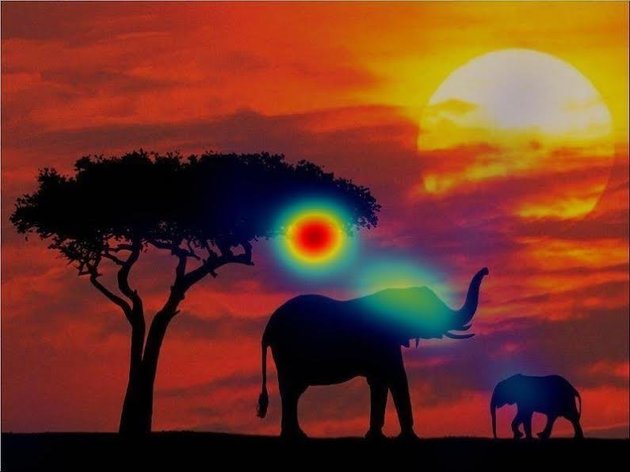

SALICON: Data-driven Approaches to Saliency Research


We study saliency and make a number of data and model contributions to unaddressed issues in saliency research (see here for details).

SALICON is an ongoing effort to understand and predict attention in complex natural scenes using a big-data paradigm and data-driven approaches. So far we have:

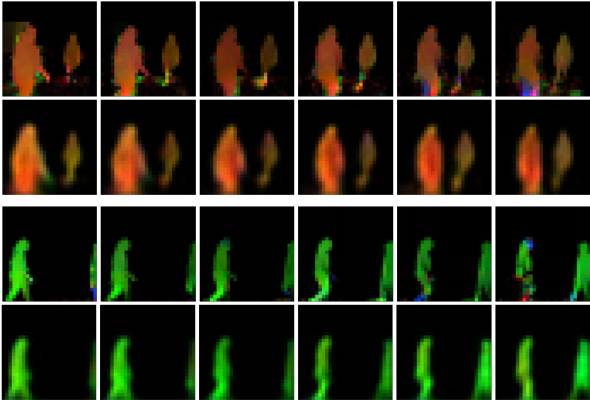

Deep Learning and Unsupervised Representation Learning


We propose a number of theoretical and applied innovations in machine learning methods. We study methods to make deep neural networks more efficient, generalizable, and trustworthy. We develop representations and architectures for visual, behavioral, and neural data that address domain-specific needs and overcome their challenges. We are also interested in visualizing and interpreting deep neural networks.

Artificial Intelligence for Mental Health


We develop neural networks and other AI technologies to understand neurodevelopmental and neuropsychiatric disorders for automatic screening and improved personalized care. For example, atypical attention and behaviors play a significant role in the development of impaired skills (e.g., social skills) and reflect a number of common developmental and psychiatric disorders, including ASD, ADHD, and OCD. Our studies integrate behavioral, fMRI, and computational modeling techniques to characterize the heterogeneity of these disorders and to develop clinical solutions. We are lucky to work with leading scientists and clinicians, including Ralph Adolphs, Jed Elison, Suma Jacob, Christine Conelea, Sherry Chan, and Kelvin Lim.

Artificial Intelligence for Neural Decoding


We are interested in areas bridging artificial intelligence and human functions. We have developed a neural decoder that infers human motor intention from peripheral nerve recordings and demonstrated the first 15-degree-of-freedom motor decoding in amputee patients. This work also provides an example of a truly bidirectional human-machine interface with mutual learning and adaptation: over time, the human learns to better express their intent to control prosthetic hand movements by mind, and the machine learns from the human's choices and refines its model. We collaborate with talented engineers, scientists, and clinicians on this exciting topic: Zhi Yang, Edward Keefer, and Jonathan Cheng.