Abstract

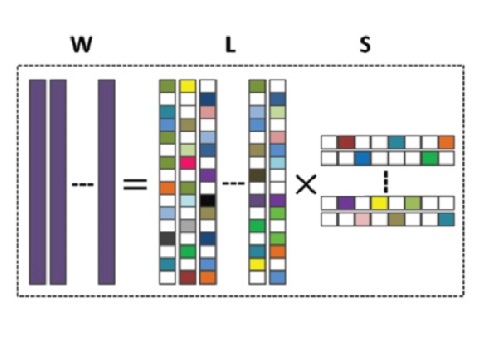

In this work, we investigate knowledge sharing across categories for action recognition in videos. The motivation is that many action categories are related and share common motion patterns (for example, diving and high jump share the jump motion). We propose a new multi-task learning method that learns latent tasks shared across categories and reconstructs a classifier for each category from these latent tasks. Compared to previous methods, our approach has two advantages: (1) The learned latent tasks correspond to basic motion patterns instead of full actions, thus enhancing the discriminative power of the classifiers. (2) A sparsity regularizer selects which categories share information, so that unrelated categories are not forced to share knowledge. Experimental results on multiple public datasets show that the proposed approach can effectively transfer knowledge between different action categories and improve on the performance of conventional single-task learning methods.
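The idea of reconstructing each category's classifier from shared latent tasks can be illustrated with a small sketch. This is not the paper's actual formulation, which this page does not state. It assumes linear classifiers, a factorization `W = L @ S` (latent tasks in the columns of `L`, per-category combination coefficients in the columns of `S`), and an L1 penalty as the sparsity regularizer, applied here through soft-thresholding. All names, sizes, and values are hypothetical and randomly generated.

```python
import numpy as np

def soft_threshold(x, t):
    """Proximal operator of t * ||x||_1: shrinks every entry toward zero."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def reconstruct_classifiers(L, S):
    """Build one linear classifier per category (columns of the result)
    as a linear combination of the latent tasks (columns of L)."""
    return L @ S

# Hypothetical sizes: 6 feature dimensions, 3 latent tasks, 4 categories.
rng = np.random.default_rng(0)
L = rng.standard_normal((6, 3))  # assumed latent tasks (e.g. basic motion patterns)
S = rng.standard_normal((3, 4))  # assumed coefficients, one column per category

# L1-style shrinkage sets weak coefficients to exactly zero, so a category
# only draws on the latent tasks it is strongly related to.
S_sparse = soft_threshold(S, 0.5)
W = reconstruct_classifiers(L, S_sparse)

x = rng.standard_normal(6)       # made-up feature vector for one video
scores = W.T @ x                 # one score per category
predicted = int(np.argmax(scores))
```

In this sketch, two categories share knowledge exactly when their columns in `S_sparse` have nonzero entries in the same row, meaning both classifiers are built partly from the same latent task.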

Resources

Paper

Qiang Zhou, Gang Wang, Kui Jia, and Qi Zhao, "Learning to Share Latent Tasks for Action Recognition," in IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 2013. [pdf] [bib]

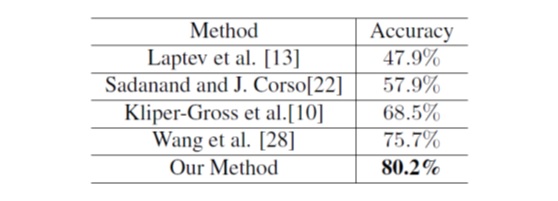

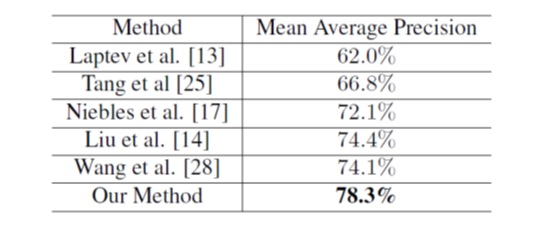

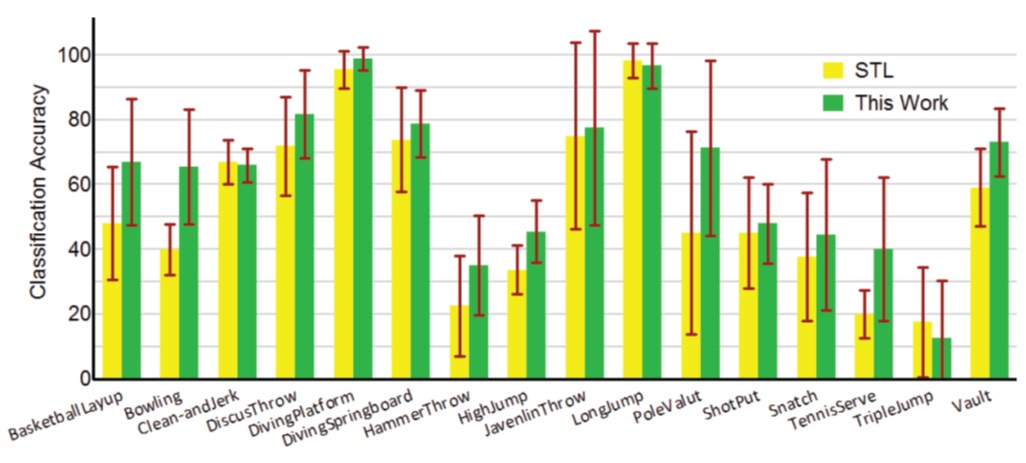

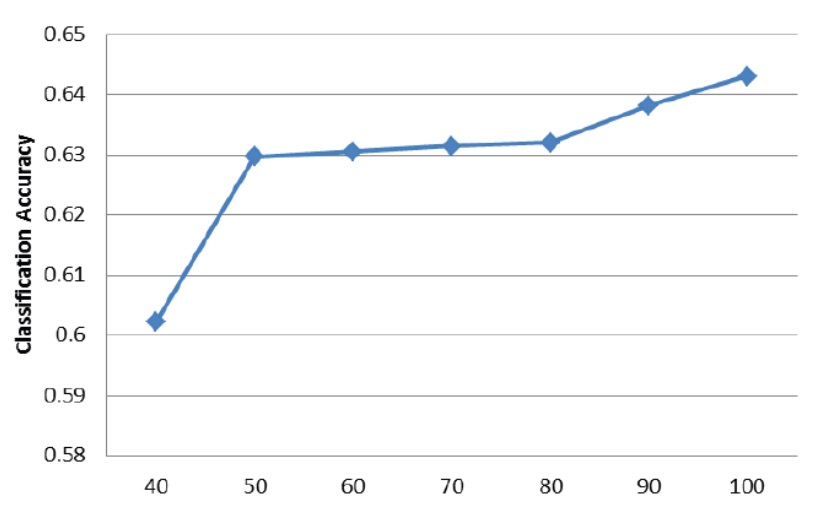

Results