Egocentric Future Localization

Hyun Soo Park, Jyh-Jing Hwang, Yedong Niu, and Jianbo Shi

University of Pennsylvania

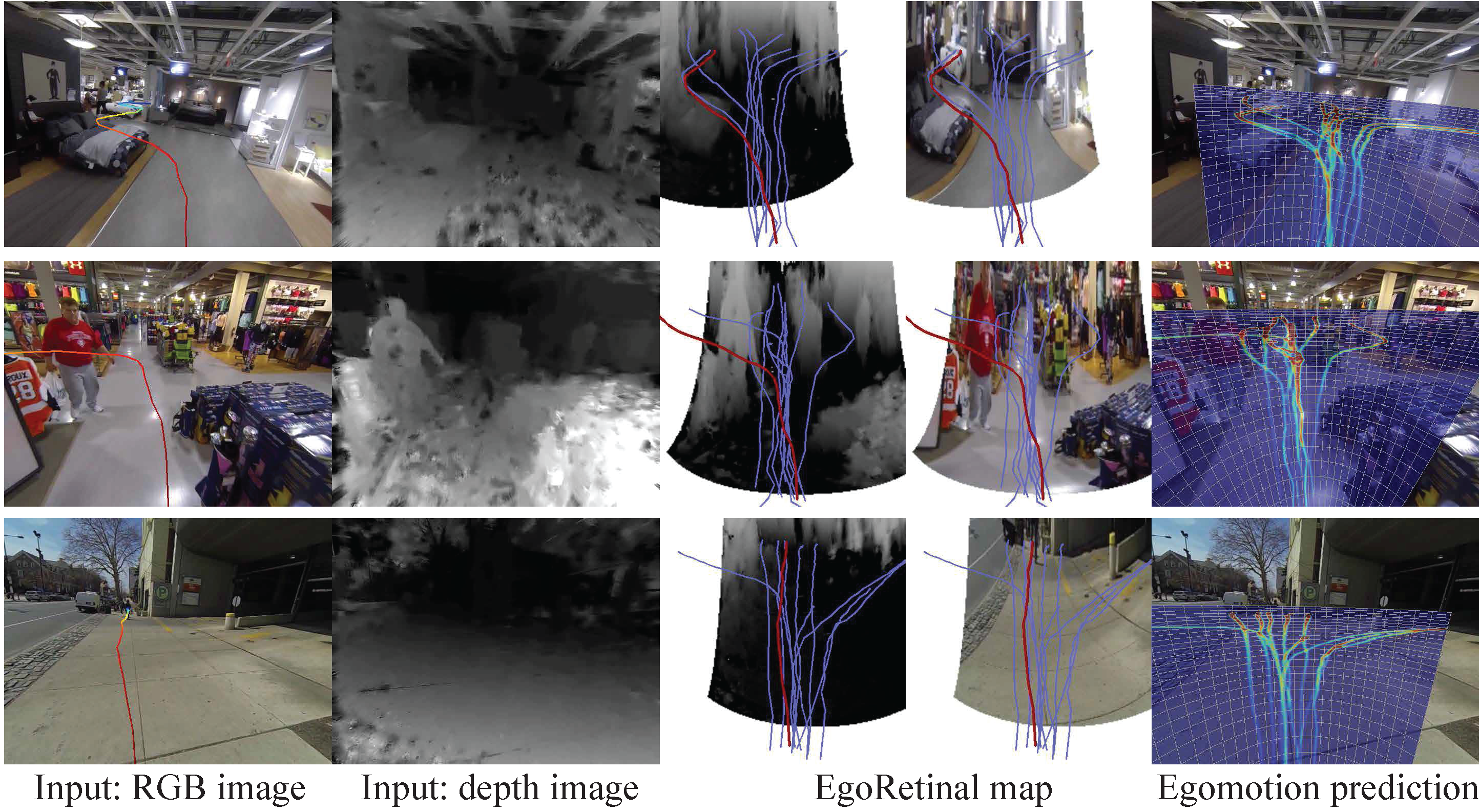

Figure 1. Where am I supposed to be after 5, 10, and 15 seconds? We present a method to predict a set of plausible future trajectories given a pair of egocentric stereo images, using an EgoRetinal map, a trajectory configuration space around a person.

Abstract

We present a method for future localization: predicting plausible future trajectories of ego-motion in egocentric stereo images. The predicted paths avoid obstacles, move between objects, and even turn around corners into the space behind objects. As a byproduct of the predicted trajectories, we discover the empty space occluded by foreground objects. One key innovation is the creation of an EgoRetinal map, akin to an illustrated tourist map, that 'rearranges' pixels taking into account depth information, the ground plane, and body motion direction, so that motion planning and perception of objects can take place in a single image space. We learn to plan trajectories directly on this EgoRetinal map using first-person experience of walking around in a variety of scenes. In the testing phase, given a novel scene, we find multiple hypotheses of future trajectories from the learned experience. We refine them by minimizing a cost function that describes the compatibility between the obstacles in the EgoRetinal map and the trajectories. We quantitatively evaluate our method to show its predictive validity and apply it to various real-world daily activities, including walking, shopping, and social interactions.
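The refinement step mentioned above can be illustrated with a small toy sketch. It is not the cost function or optimizer from the paper: it assumes trajectories are lists of 2D points in a flat map, models each obstacle as a Gaussian penalty (the `sigma` parameter is hypothetical), adds a simple smoothness term, and runs plain gradient descent with the endpoints held fixed. All function names here are illustrative.

```python
import math

def total_cost(traj, obstacles, sigma=0.5, weight=1.0):
    """Toy cost: Gaussian obstacle penalty plus squared segment lengths."""
    obstacle_term = sum(
        math.exp(-((x - ox) ** 2 + (y - oy) ** 2) / (2 * sigma ** 2))
        for x, y in traj
        for ox, oy in obstacles
    )
    smooth_term = sum(
        (x1 - x0) ** 2 + (y1 - y0) ** 2
        for (x0, y0), (x1, y1) in zip(traj, traj[1:])
    )
    return obstacle_term + weight * smooth_term

def cost_gradient(traj, obstacles, sigma, weight):
    """Analytic gradient of total_cost with respect to every trajectory point."""
    grad = [[0.0, 0.0] for _ in traj]
    for i, (x, y) in enumerate(traj):
        for ox, oy in obstacles:
            dx, dy = x - ox, y - oy
            bump = math.exp(-(dx * dx + dy * dy) / (2 * sigma ** 2))
            grad[i][0] -= bump * dx / sigma ** 2
            grad[i][1] -= bump * dy / sigma ** 2
    for i in range(len(traj) - 1):
        sx = traj[i + 1][0] - traj[i][0]
        sy = traj[i + 1][1] - traj[i][1]
        grad[i + 1][0] += 2 * weight * sx
        grad[i + 1][1] += 2 * weight * sy
        grad[i][0] -= 2 * weight * sx
        grad[i][1] -= 2 * weight * sy
    return grad

def refine_trajectory(init, obstacles, sigma=0.5, weight=1.0,
                      step=0.05, iters=200):
    """Gradient descent on total_cost; the first and last points stay fixed."""
    traj = [[float(x), float(y)] for x, y in init]
    for _ in range(iters):
        grad = cost_gradient(traj, obstacles, sigma, weight)
        for i in range(1, len(traj) - 1):
            traj[i][0] -= step * grad[i][0]
            traj[i][1] -= step * grad[i][1]
    return [tuple(p) for p in traj]

# A straight-line hypothesis that passes very close to one obstacle.
initial = [(0.0, 0.5 * k) for k in range(9)]
obstacles = [(0.1, 2.0)]
refined = refine_trajectory(initial, obstacles)
```

In this toy setting the refined path bends away from the obstacle while the smoothness term keeps it from straying far from the initial hypothesis. Running the same refinement on several initial hypotheses would yield several candidate trajectories, loosely mirroring the multiple-hypothesis idea described in the abstract.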

Paper

Hyun Soo Park, Jyh-Jing Hwang, Yedong Niu, and Jianbo Shi, "Egocentric Future Localization," Conference on Computer Vision and Pattern Recognition (CVPR) (oral), 2016. [paper, slide, bib, dataset]

Video

video download (225 MB)